AI cameras help prevent collisions by continuously sensing your surroundings in real time and quickly detecting obstacles such as pedestrians, vehicles, and other objects. They use advanced algorithms, sensor fusion, and machine learning to interpret complex visual data accurately. This enables your vehicle to respond with alerts or braking before a danger escalates. As technology improves, these systems are becoming more reliable and capable. Keep exploring to discover how these innovations are shaping safer roads for everyone.

Key Takeaways

- AI cameras analyze sensor data in real time to identify obstacles, pedestrians, and vehicles promptly.

- Sensor fusion combines lidar, radar, and cameras for comprehensive environment awareness.

- Deep learning algorithms interpret complex visual data, improving obstacle detection accuracy.

- Early hazard detection enables immediate alerts and system responses to prevent collisions.

- Proper sensor placement and environmental sensing enhance obstacle recognition in diverse conditions.

The Role of AI in Modern Safety Systems

Artificial intelligence has become a critical component of modern safety systems, actively enhancing vehicle awareness and reaction times. As you rely more on AI cameras, it’s important to consider AI ethics and to ensure these systems operate fairly and transparently. Privacy concerns also come into play, since AI cameras collect vast amounts of data about your surroundings and driving habits. You might worry about how this data is stored, shared, or used without your knowledge. Developers and manufacturers must address these issues by implementing strict data protection measures and clear privacy policies. Strong security measures are also vital to prevent vulnerabilities and protect the integrity of these safety systems. By balancing technological advances with ethical considerations, AI in safety systems can improve collision prevention without compromising your rights or safety. This responsible approach fosters trust and encourages wider adoption of these life-saving technologies.

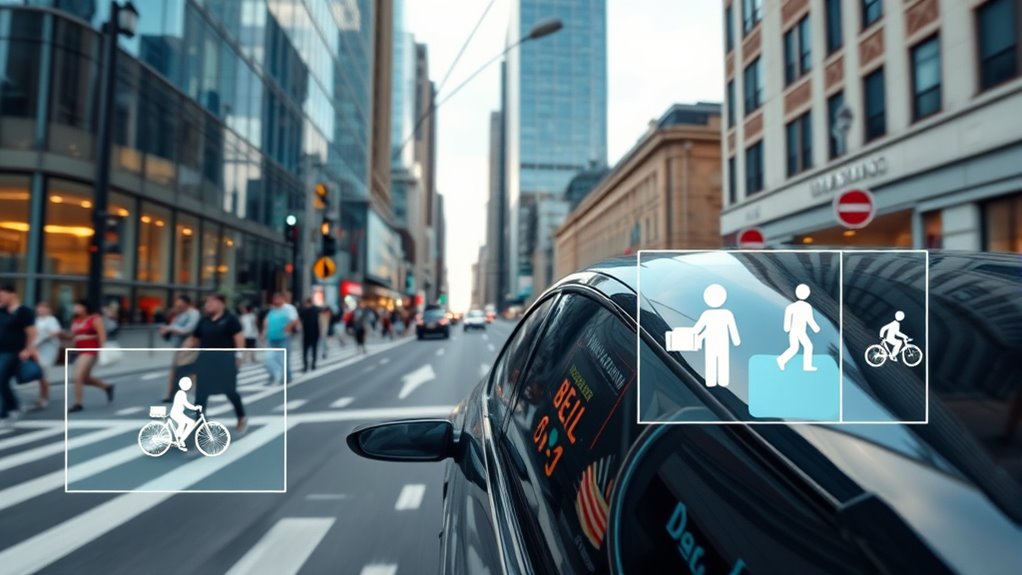

How AI Cameras Detect Obstacles in Real-Time

AI cameras detect obstacles in real time by continuously analyzing data from their sensors and image processors. They use sensor fusion to combine inputs from multiple sensors, such as lidar, radar, and cameras, creating a detailed view of the environment. This integration makes obstacle detection more accurate, even in challenging conditions. Edge computing plays a vital role by processing data locally within the camera system, reducing latency and enabling immediate responses. As a result, the system quickly identifies objects, pedestrians, or vehicles that may pose a threat. By leveraging sensor fusion and edge computing, AI cameras deliver swift, reliable obstacle detection, helping prevent collisions before they happen. This real-time analysis forms the foundation for smarter, safer navigation in various applications.
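To make the idea of sensor fusion concrete, here is a minimal sketch in Python. It assumes each sensor reports a distance to the same obstacle along with a rough uncertainty, and it blends them with an inverse-variance weighted average, one common textbook approach. The `RangeReading` class, the `fuse_ranges` function, and the sample numbers are all hypothetical and are not taken from any particular vehicle system.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    sensor: str        # e.g. "camera", "radar", "lidar"
    distance_m: float  # estimated distance to the obstacle, in metres
    std_m: float       # assumed measurement standard deviation, in metres


def fuse_ranges(readings):
    """Inverse-variance weighted average of independent range estimates."""
    if not readings:
        raise ValueError("need at least one reading")
    # A sensor with a smaller standard deviation gets a larger weight.
    weights = [1.0 / (r.std_m ** 2) for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    fused_std = (1.0 / total) ** 0.5
    return fused, fused_std


# Illustrative values only: the camera is assumed to be the least precise.
readings = [
    RangeReading("camera", 21.0, 2.0),
    RangeReading("radar", 20.0, 0.5),
    RangeReading("lidar", 20.2, 0.2),
]
distance, std = fuse_ranges(readings)
print(f"fused distance: {distance:.2f} m (std {std:.2f} m)")
```

The fused estimate leans toward the most precise sensor, and its uncertainty is lower than that of any single input, which is the main reason to combine sensors in the first place. Production systems typically use more elaborate filters that also track motion over time.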

Advanced Algorithms Powering Obstacle Recognition

Advanced algorithms are the backbone of effective obstacle recognition in AI cameras. They use deep learning techniques to accurately identify objects, even in complex environments. With real-time data processing, these algorithms help your safety systems respond to potential hazards with minimal delay.

Deep Learning Techniques

Deep learning techniques have revolutionized obstacle recognition in collision prevention systems by enabling cameras to interpret complex visual data with remarkable accuracy. You benefit from neural networks that learn to identify patterns and extract features from images, making obstacle detection faster and more reliable. These algorithms improve feature extraction, allowing your AI camera to distinguish between objects like pedestrians, vehicles, or animals, even in cluttered environments. By training on vast datasets, deep learning models adapt to new scenarios, enhancing safety. Additionally, the ability of neural networks to handle complex data is essential for real-world applications, ensuring the system’s robustness and effectiveness.

Real-Time Data Processing

How do collision prevention systems process data instantly to recognize obstacles? They rely on real-time data processing powered by advanced algorithms that minimize data latency. Sensor fusion combines inputs from cameras, radar, and lidar to create a unified understanding of your environment swiftly. This integration ensures quick detection of obstacles, even in complex scenarios.

Feel the confidence as:

- Your vehicle reacts instantly, avoiding accidents

- Data flows seamlessly, despite busy surroundings

- Multiple sensors work together with precision

- Speed and accuracy keep you safe

- Advanced algorithms process info in the blink of an eye

Integrating AI Cameras Into Autonomous Vehicles

Integrating AI cameras into autonomous vehicles allows for real-time obstacle detection, helping you react quickly to changing conditions. Combining camera data with other sensors enhances the vehicle’s understanding of its environment, reducing blind spots. These advancements lead to safer driving experiences by implementing more effective safety measures.

Real-Time Obstacle Detection

Real-time obstacle detection is a critical component of autonomous vehicle safety, enabling AI cameras to identify and respond to potential hazards quickly. Proper sensor placement provides wide coverage, allowing your vehicle to see obstacles from all angles. Advanced obstacle classification helps distinguish between pedestrians, animals, and static objects, reducing false alarms and improving responses. With rapid processing, your vehicle can begin braking or steering away within milliseconds of a detection, helping prevent collisions before they happen. Feel the confidence as AI cameras constantly monitor your surroundings and adapt to changing conditions. Additionally, camera sensors with a wide dynamic range keep images clear in varying lighting conditions, further improving obstacle detection accuracy.
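One simple way to picture how a system might decide between doing nothing, warning the driver, and braking is a time-to-collision (TTC) check. The sketch below is an illustration only: the function names and the 2.5 s and 1.2 s thresholds are assumptions chosen for the example, not values from any real vehicle.

```python
def time_to_collision(gap_m, closing_speed_mps):
    """Seconds until impact at constant closing speed, or None if not closing."""
    if closing_speed_mps <= 0:
        return None  # the gap is steady or growing
    return gap_m / closing_speed_mps


def choose_action(gap_m, closing_speed_mps, warn_s=2.5, brake_s=1.2):
    """Map time-to-collision onto an action using assumed thresholds."""
    ttc = time_to_collision(gap_m, closing_speed_mps)
    if ttc is None:
        return "none"
    if ttc <= brake_s:
        return "brake"
    if ttc <= warn_s:
        return "warn"
    return "none"


# Obstacle distance in metres, closing speed in metres per second.
for gap, speed in [(30, 10), (20, 10), (10, 10), (10, -5)]:
    print(gap, speed, choose_action(gap, speed))
```

With a 10 m/s closing speed, a 30 m gap leaves 3 seconds and triggers nothing, a 20 m gap triggers a warning, and a 10 m gap triggers braking. A negative closing speed means the obstacle is pulling away, so no action is taken.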

Sensor Data Integration

Seamlessly combining AI cameras with other vehicle sensors is essential for creating an all-encompassing understanding of the surroundings in autonomous driving. Sensor fusion integrates data from cameras, lidar, radar, and ultrasonic sensors, providing a thorough view of the environment. This process relies on data synchronization, ensuring that information from different sensors aligns accurately in time and space. You benefit from more reliable obstacle detection and better decision-making, as the system cross-verifies inputs to reduce errors. Effective sensor data integration minimizes blind spots and enhances object recognition, even in challenging conditions like low light or adverse weather. By harmonizing these data streams, autonomous vehicles can respond swiftly and safely, preventing collisions and ensuring smooth navigation.
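The time-alignment part of data synchronization can be sketched in a few lines. The example below assumes camera frames and radar measurements each carry a timestamp in seconds, and it pairs every camera frame with the nearest radar measurement, discarding pairs that are too far apart. The `nearest_match` function, the 20 ms tolerance, and the sample timestamps are hypothetical.

```python
from bisect import bisect_left


def nearest_match(camera_ts, radar_ts, tolerance_s=0.02):
    """Pair each camera timestamp with the closest radar timestamp.

    radar_ts must be sorted ascending. Returns (camera_t, radar_t) pairs;
    radar_t is None when no radar sample lies within tolerance_s.
    """
    pairs = []
    for t in camera_ts:
        i = bisect_left(radar_ts, t)
        # Only the neighbours on either side of the insertion point can be nearest.
        candidates = radar_ts[max(i - 1, 0):i + 1]
        best = min(candidates, key=lambda r: abs(r - t), default=None)
        if best is not None and abs(best - t) > tolerance_s:
            best = None
        pairs.append((t, best))
    return pairs


camera_ts = [0.000, 0.033, 0.067, 0.100]  # roughly 30 frames per second
radar_ts = [0.005, 0.055, 0.105]          # roughly 20 measurements per second
pairs = nearest_match(camera_ts, radar_ts)
for cam_t, radar_t in pairs:
    print(cam_t, radar_t)
```

In this sample the frame at 0.033 s has no radar measurement within 20 ms, so it is left unpaired rather than fused with stale data. Aligning sensors in space (mapping each sensor's coordinates into a shared frame) is a separate step that this sketch does not cover.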

Enhanced Safety Measures

Building on the foundation of sensor data integration, incorporating AI cameras into autonomous vehicles substantially boosts safety measures. Through advanced sensor fusion and environmental mapping, your vehicle gains a clearer understanding of its surroundings, reducing the risk of accidents. AI cameras detect obstacles, pedestrians, and road signs with precision, enabling timely reactions. This integration creates a layered safety system that adapts to dynamic conditions. Understanding the regulatory environment surrounding autonomous vehicle technology also helps manufacturers plan deployment and compliance. Feel the confidence as your vehicle:

- *Anticipates hazards before they happen*

- *Responds instantly to sudden changes*

- *Navigates complex environments safely*

- *Prevents collisions with real-time alerts*

- *Ensures your journey remains secure and smooth*

Benefits of Early Hazard Identification

Early hazard identification through AI cameras allows you to address potential dangers before they escalate into accidents. By enabling hazard anticipation, these cameras give you the chance to react swiftly and prevent incidents. Recognizing hazards early helps you maintain a safer environment, reducing the likelihood of collisions and injuries. The proactive detection system alerts you to obstacles or risky behaviors in real time, allowing timely intervention. This early warning capability not only enhances safety but also minimizes damage and downtime. With AI-driven hazard detection, you gain an essential advantage in accident prevention, fostering trust and confidence in your safety measures. Ultimately, early hazard identification empowers you to respond proactively, creating a safer space for everyone involved.
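A little arithmetic shows why earlier detection matters. The sketch below uses the standard stopping-distance relation: distance covered during the detection and reaction delay, plus distance covered while braking at a constant deceleration. The 7 m/s² deceleration and the latency values are assumptions for illustration, not measurements from any specific system.

```python
def stopping_distance_m(speed_kmh, latency_s, decel_mps2=7.0):
    """Distance travelled from hazard appearance to standstill.

    latency_s covers detection plus reaction time; decel_mps2 is an assumed
    constant braking deceleration.
    """
    v = speed_kmh / 3.6                  # convert km/h to m/s
    reaction = v * latency_s             # distance covered before braking starts
    braking = v ** 2 / (2 * decel_mps2)  # distance covered while braking
    return reaction + braking


# Compare a slow response with progressively faster ones at 50 km/h.
for latency in (1.5, 0.5, 0.2):
    d = stopping_distance_m(50, latency)
    print(f"latency {latency:.1f} s -> {d:.1f} m")
```

At a fixed speed the braking term stays the same, so every second of latency saved removes one second's worth of travel from the total stopping distance.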

Challenges and Limitations of AI-Based Detection

While AI cameras offer significant advantages in collision prevention, they also face notable challenges and limitations. Sensor limitations can cause blind spots or misdetections, especially in complex environments. Environmental challenges like poor lighting, weather conditions, or clutter can degrade performance, making it harder to identify obstacles accurately. These issues could lead to delayed responses or false alarms, risking safety. You might feel frustrated when technology doesn’t perform as expected, or worried about relying solely on AI in critical moments. Common concerns include:

- Frustration over missed detections

- Anxiety about unpredictable environmental factors

- Disappointment from false alarms

- Fear of overdependence on imperfect tech

- Concerns about safety gaps during adverse conditions

Future Developments in Obstacle Recognition Technology

Advancements in obstacle recognition technology are poised to substantially enhance AI camera capabilities, addressing current limitations and improving safety. Future developments will likely integrate predictive maintenance, allowing systems to anticipate hardware failures before they occur and keep performance consistent. Additionally, environmental sensing will become more sophisticated, enabling cameras to better interpret complex surroundings, such as weather conditions or dynamic lighting. These improvements will allow AI to distinguish obstacles more accurately and adapt in real time. As a result, collision prevention will become more reliable and proactive, reducing false positives and enhancing overall safety. By combining predictive maintenance with advanced environmental sensing, obstacle recognition technology will evolve to provide smarter, more responsive solutions that keep both pedestrians and drivers safer.

Impact on Urban Mobility and Public Safety

AI cameras for collision prevention are transforming urban mobility by making streets safer and more efficient. They enhance pedestrian safety by quickly detecting and responding to potential hazards, reducing accidents and injuries. Improved traffic flow results from real-time adjustments that prevent congestion and minimize delays. With smarter monitoring, you experience fewer disruptions and a more predictable commute. These advancements create a safer environment for everyone, encouraging walking and cycling while reducing vehicle collisions. Additionally, trustworthy AI systems ensure consistent performance and help build public confidence in these safety measures.

- Feel more confident crossing busy intersections

- Witness smoother traffic moving through your neighborhood

- Experience fewer close calls and accidents

- Enjoy safer streets for children and elders

- Observe communities becoming more liveable and connected

Frequently Asked Questions

How Do AI Cameras Differentiate Between Movable and Stationary Obstacles?

You might wonder how AI cameras tell movable obstacles apart from stationary ones. They rely on sensor calibration to interpret data accurately, which supports reliable obstacle classification. When the camera detects an object, it tracks the object’s position over time and accounts for the vehicle’s own motion: if the object’s position in the world changes, it’s classified as moving; if not, as stationary. This dynamic process helps the system adapt in real time, enabling safer navigation and collision prevention for vehicles and robots.
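Here is a minimal sketch of that idea, simplified to one dimension along the direction of travel. It assumes the system already tracks the distance to an object across frames and knows the vehicle's own speed. The `classify_motion` function and the 0.5 m/s threshold are hypothetical choices for illustration.

```python
def classify_motion(rel_positions_m, timestamps_s, ego_speed_mps, threshold_mps=0.5):
    """Label a tracked object as 'moving' or 'stationary'.

    rel_positions_m: distance ahead of the vehicle in each frame.
    ego_speed_mps: the vehicle's own speed, assumed constant over the window.
    """
    if len(rel_positions_m) < 2:
        raise ValueError("need at least two observations")
    dt = timestamps_s[-1] - timestamps_s[0]
    if dt <= 0:
        raise ValueError("timestamps must increase")
    # Velocity of the object relative to the vehicle.
    rel_velocity = (rel_positions_m[-1] - rel_positions_m[0]) / dt
    # Adding the vehicle's own speed gives the object's speed over the ground.
    ground_speed = rel_velocity + ego_speed_mps
    return "moving" if abs(ground_speed) > threshold_mps else "stationary"


times = [0.0, 0.5, 1.0]
# Vehicle travelling at 10 m/s toward a parked car and a slower cyclist.
print(classify_motion([50.0, 45.0, 40.0], times, ego_speed_mps=10.0))
print(classify_motion([30.0, 27.5, 25.0], times, ego_speed_mps=10.0))
```

The parked car closes at exactly the vehicle's own speed, so its ground speed works out to zero and it is labeled stationary. The cyclist closes more slowly, which implies it is travelling forward at about 5 m/s, so it is labeled moving.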

What Privacy Concerns Arise With Widespread Deployment of AI Obstacle Detection Systems?

You might worry about data privacy and surveillance concerns with widespread AI obstacle detection systems. These cameras constantly collect and analyze data, raising fears that personal information could be misused or accessed without consent. As these systems become more common, you could feel your movements are being overly monitored, leading to privacy breaches. It’s essential to balance the benefits of collision prevention with protecting individual rights and ensuring transparent data handling practices.

Can AI Cameras Recognize Obstacles Under Adverse Weather Conditions?

You might worry about AI cameras recognizing obstacles in bad weather, but advances in sensor calibration and weather adaptation help. These systems are designed to adjust to conditions like fog, rain, or snow, and combining camera data with radar helps when visibility drops. Performance can still degrade in severe conditions, so obstacle recognition is becoming more dependable with ongoing improvements, but it is not immune to weather.

How Do AI Systems Handle False Positives or Negatives in Obstacle Detection?

You might wonder how AI systems handle false positives or negatives during obstacle detection. They rely on sensor calibration to improve accuracy and use diverse datasets to train models for different scenarios. This helps minimize errors, but some false readings may still occur. Continuous updates and real-time adjustments improve reliability, so your AI camera gets better at recognizing real obstacles while ignoring false alarms, keeping you safer on the road.
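One widely used way to damp false alarms is to require that a detection persist across several frames before acting on it. The sketch below shows an "m of the last n frames" rule. The `DetectionConfirmer` class and the 3-of-5 setting are assumptions for illustration, not the method of any specific product.

```python
from collections import deque


class DetectionConfirmer:
    """Confirm an obstacle only if it was detected in at least m of the last n frames."""

    def __init__(self, m=3, n=5):
        if not 0 < m <= n:
            raise ValueError("require 0 < m <= n")
        self.m = m
        self.history = deque(maxlen=n)  # old frames drop off automatically

    def update(self, detected):
        """Record one frame's raw detection and return the confirmed state."""
        self.history.append(bool(detected))
        return sum(self.history) >= self.m


confirmer = DetectionConfirmer(m=3, n=5)
# One spurious hit, then a real obstacle with a dropped frame at the end.
frames = [True, False, False, False, True, True, True, False]
results = [confirmer.update(f) for f in frames]
print(results)
```

The isolated detection in the first frame is never confirmed, while the real obstacle stays confirmed through the final dropped frame. The trade-off is a short delay before confirmation, so the values of m and n have to balance false alarms against reaction time.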

What Training Data Is Used to Improve AI Obstacle Recognition Accuracy?

Think of AI training like a master artist perfecting a masterpiece. You provide the system with annotated datasets, meticulously labeled images and scenarios, to teach it what obstacles look like. Sensor calibration guarantees data accuracy, sharpening detection precision. By feeding diverse, real-world examples, you help the AI recognize obstacles reliably, reducing errors. This continuous learning process enhances obstacle recognition, making AI cameras smarter and safer in preventing collisions.

Conclusion

Think of AI cameras as vigilant guardians patrolling the streets, ever-watchful and ready to alert you of unseen dangers. They act like a lighthouse guiding ships safely through stormy waters, preventing collisions before they happen. As technology advances, these digital sentinels will become even smarter, steering us toward safer urban journeys. Embrace this evolution, because with AI at the helm, you’re steering a future where safety shines brighter than ever.